AI Toys or AI Tools?

In the rush to adopt artificial intelligence (AI), many organizations get caught up in the excitement of “shiny objects.” These quick wins or experimental pilots—let’s call them “AI toys”—often generate buzz but deliver limited value. In contrast, “AI tools” are purposeful solutions with a clear path to impact, designed to integrate seamlessly with organizational processes and strategy. The difference between the two can make or break an organization’s AI journey.

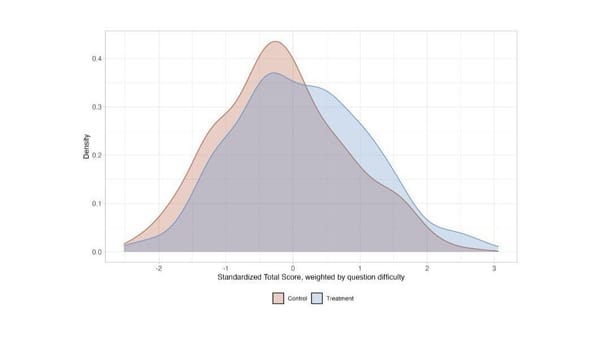

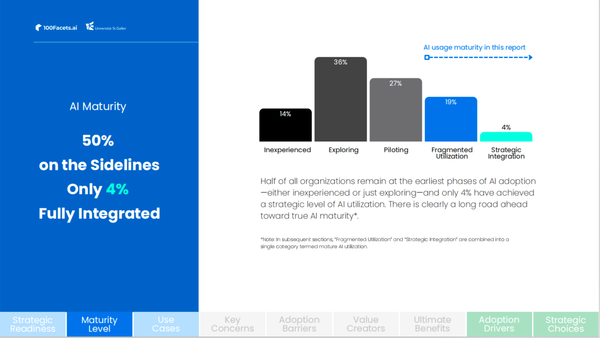

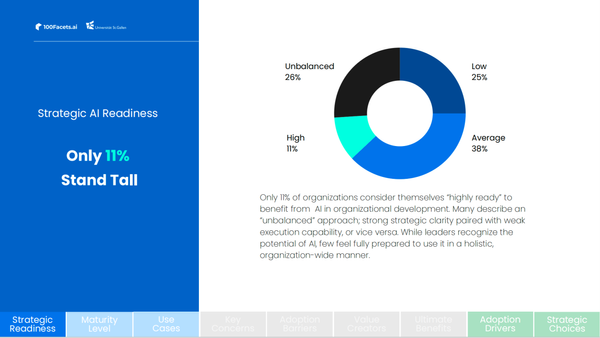

A recent report by 100facets.ai and the University of St. Gallen (HSG) Executive School on the state of AI in organizational development (OD) underscores this point: 57% of respondents cite a lack of internal expertise in HR/L&D as a top barrier, while 50% point to a lack of clearly defined use cases.

These hurdles suggest that, without a deliberate plan, organizations risk pouring resources into attention-grabbing “toys” that fail to scale. So I decided to use this edition of the Win with AI newsletter to walk you through why that distinction matters and how to make sure your AI projects become sustainable tools, especially during the early phases of adoption.

One of my favorite theoretical frameworks: The S-Curve of Innovation, and Why Early Moves Matter

The S-curve of innovation is a useful model for understanding how technologies mature. In the early phase, adoption is slow, often limited to innovators and early adopters. Over time, a tipping point is reached, and adoption accelerates rapidly—until the technology eventually reaches a saturation point.

In the context of AI:

1. Early Stage: Novelty is high, proven use cases are fewer, and experimentation dominates.

2. Tipping Point: Best practices emerge, infrastructure and expertise solidify, and the pace of adoption accelerates.

3. Maturity: AI is embedded into daily workflows and drives significant business outcomes.
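For readers who like to see the shape behind the metaphor, here is a minimal sketch of an S-curve, assuming adoption follows a simple logistic function. The function name `adoption_share` and the parameters `midpoint` and `steepness` are hypothetical choices for illustration only; they are not taken from our report or fitted to any real adoption data.

```python
import math

def adoption_share(t, midpoint=5.0, steepness=1.0):
    """Share of potential adopters (between 0 and 1) at time t.

    Assumes a logistic curve: slow start, rapid growth around
    `midpoint` (the tipping point), then saturation.
    Both parameters are illustrative, not empirical.
    """
    return 1.0 / (1.0 + math.exp(-steepness * (t - midpoint)))

# Print the illustrative adoption share every two years.
for year in range(0, 11, 2):
    print(f"year {year:2d}: {adoption_share(year):6.1%} adopted")
```

In a curve like this, the early years barely move the needle, which is exactly why early experiments should be judged by what you learn from them rather than by immediate scale.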

Because the early stage is marked by experimentation, it’s tempting to roll out multiple “toys” without a clear ROE (Return on Experiment). To be clear, I deliberately talk about ROE rather than ROI for these early initiatives, because your first AI projects won't necessarily show up as a profit increase in your financial statements. But you can still learn a lot from a failed experiment if it was thoughtfully and strategically designed.

But what do I mean by AI Toys vs. AI Tools?

I have put together a few signs that help tell the two apart, though it is never easy to "predict" the future.

AI Toys are usually shiny, novelty-driven products that lack a well-defined business problem. Many of them are "me too" products that ride the latest wave of AI news (these days, agents are the hot topic, so be careful).

- Risks: Consumes budget and talent with minimal impact; can generate employee frustration or disillusionment.

- Example: A chatbot that demonstrates AI capabilities but has no integration with core HR or L&D systems, resulting in minimal employee adoption.

AI Tools are solid solutions to clear problems and are designed for scalability. They usually come from agile startups or companies with deep functional domain expertise (e.g. in Learning, HR, or Marketing) and advanced technological capabilities (LLMs, agentic solution architecture, etc.).

- Benefits: Demonstrates tangible ROI, fosters internal capability building, and garners leadership support.

- Example: An AI-driven performance management system that integrates with existing HR platforms, uses real-time analytics, and supports targeted development plans. (Let me know if you want to see a detailed case on this; we recently wrote up a success case with one client.)

Why Organizations Fall for Toys—and the Danger of Doing So

There is no single or simple answer to this question. Three common reasons that I have observed over the past few years of research and practice in the field of AI are:

1. Hype Over Strategy

In the early stages, flashy demos can overshadow the need for a robust business case. The 100Facets and HSG-ES report on AI in OD shows that 50% of organizations struggle with clearly defined AI use cases. Without clarity, it’s easy to invest in “cool” pilots that fail to address core business challenges.

2. Limited Expertise

With 57% of HR and L&D functions reporting a lack of internal AI expertise, teams may not know how to separate viable solutions from hype. This expertise gap can lead to adopting technology that looks sophisticated but does not solve real problems.

3. Insufficient Budget & Fragmentation

A scattered approach can lead to budget constraints—cited by 42% of respondents—and result in many half-finished projects. Worse, disconnected pilots make it harder to build a cohesive AI roadmap, further slowing adoption and ROE. So, many would say let's just do something for now...

So what? Shall we stop doing any AI?

Not at all. The only way to figure out which tools your organization truly needs is through experimentation. It is not an abstract thought process that happens in the executive boardroom; it is many smart experiments and courageous moves that eventually take you up the S-curve. Here are a few strategies to help you on this journey into the unknown:

1. Start with a Business Problem, Not the Technology: Begin by identifying specific pain points in your function or organization. To borrow an example from the OD world: is leadership development lagging? Are you struggling to offer each leader the right support based on what they really need? Pinpoint the issues that AI can address.

2. Secure Leadership Buy-In Early: Although "only" 20% of respondents in our study cite lack of support from senior management as a barrier, senior buy-in is crucial for securing budgets and accelerating adoption. As I discuss in my other article, CXOs are almost 5x more optimistic than functional experts on the ground that it is clear what needs to be done with AI. Because of this huge perception gap, these conversations are going to be both challenging and important.

3. Build Internal Expertise or Strategic Partnerships: Develop in-house AI fluency and form agile cross-functional groups to guide implementation. Or, as in most cases, partner up for expertise: if internal expertise is lacking, work with external partners who are good at what they do.

Fun real example: One organization was buying a very expensive service from a famous consulting firm (big, but very slow) for its digital transformation project, even though a few months earlier that same firm had been inviting the client organization to share insights and thought leadership on the exact same topic! That was a bad idea and led to poor results. You need partners who deeply know their stuff and have real experience in delivering results, not people with fancy words who deliver really shiny slides.

4. Foster a Culture of Iteration and Feedback: Pilot projects can be valuable, but make sure they have a roadmap for expansion. For this, you need to develop a true Growth Mindset among your leaders and across the organization (let me know if you want to benefit from a quick self / 360 assessment we developed on this at 100facets).

As your organization navigates the early stages of AI adoption, it’s critical to differentiate between “AI toys” that generate buzz and “AI tools” that drive lasting impact.

The bottom line: Early experiments with robust AI tools that focus on real business problems can help you learn valuable lessons, fast. They will pay off exponentially as you climb the S-curve. Rather than chasing short-lived experiments because Mr. X or Ms. Y recommends them, build the foundational expertise, culture, and technology that will yield real returns and position your organization as an AI leader for years to come.

You can download our report at https://100facets.ai/report

And stay tuned as our white paper is almost done with the final design touches and will be out soon!